World's Slowest Raytracer

I have not talked about raytracing in RADV for a while, but after some procrastination and a stretch of being focused on other things, I recently got back to it and reached my next milestone.

In particular, I have been hacking away at CTS and got to the point where dEQP-VK.ray_tracing.* runs to completion without crashes or hangs. Furthermore, I got the pass rate to 90% of non-skipped tests. So we’re finally getting somewhere close to usable.

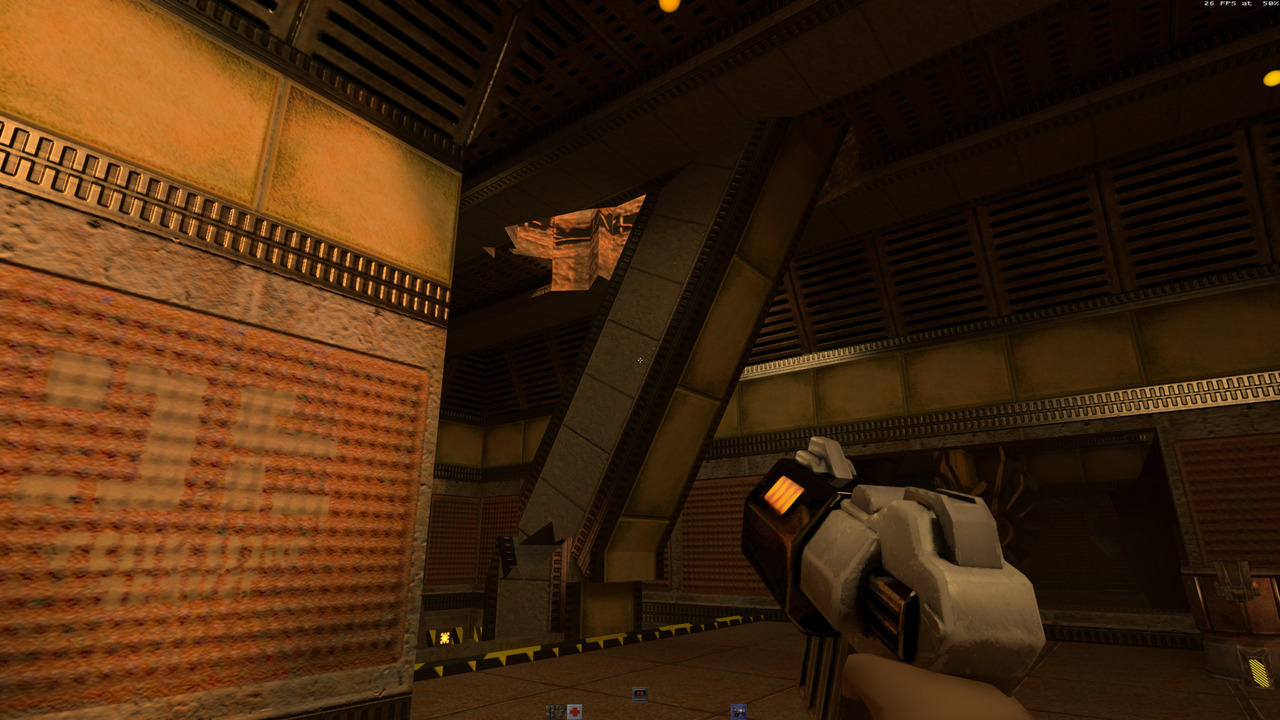

As further show that it is usable my fixes for CTS also fixed the corruption issues in Quake 2 RTX (Github version), delivering this image:

Of course not everything is perfect yet. Besides the CTS pass rate not being 100%, it has roughly half the Windows performance at 4K right now, and we still have some feature gaps to close before it is really usable for most games.

Why is it slow?

TL;DR: Because I haven’t optimized it yet and took every shortcut imaginable.

AMD raytracing primer

Raytracing with Vulkan works in two steps:

- You build a giant acceleration structure that contains all your geometry. This usually ends up being some kind of tree, typically a Bounding Volume Hierarchy (BVH).

- Then you trace rays using some traversal shader through the acceleration structure you just built.

With RDNA2 AMD started accelerating this by adding an instruction that allowed doing intersection tests between a ray and a single BVH node, where the BVH node can either be

- A triangle

- A box node specifying 4 AABB boxes

Of course this isn’t quite enough to deal with all geometry types in Vulkan so we also add two more:

- an AABB box

- an instance of another BVH combined with a transformation matrix

Building the BVH

With a search tree like a BVH it is very possible to build trees that are nearly useless. As an example, consider a binary search tree that is very unbalanced. A BVH can go bad in similar ways, for instance by being unbalanced or by having overlapping bounding volumes.

And my implementation is the simplest thing possible: the input geometry becomes the leaves in exactly the same order and then internal nodes are created just as you’d draw them. That is probably decently fast in building the BVH but surely results in a terrible BVH to actually use.
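
The "leaves in input order" idea can be sketched roughly as follows. This is not the RADV code: it is a minimal CPU-side illustration that assumes internal nodes are formed by grouping every 4 consecutive nodes of the level below, and the names `union`, `build_bvh` and `height` as well as the dict-based node layout are made up for the example.

```python
def union(boxes):
    """Smallest box (lo, hi) that encloses all the given boxes."""
    boxes = list(boxes)
    lo = tuple(min(b[0][axis] for b in boxes) for axis in range(3))
    hi = tuple(max(b[1][axis] for b in boxes) for axis in range(3))
    return lo, hi


def build_bvh(leaf_boxes, width=4):
    """Build a BVH bottom-up without sorting or splitting anything.

    Assumption for this sketch: each parent simply takes the next
    `width` nodes of the level below, in input order.
    """
    if not leaf_boxes:
        raise ValueError("need at least one leaf")
    level = [{"box": box, "children": [], "leaf": i}
             for i, box in enumerate(leaf_boxes)]
    while len(level) > 1:
        level = [
            {"box": union(n["box"] for n in level[i:i + width]),
             "children": level[i:i + width],
             "leaf": None}
            for i in range(0, len(level), width)
        ]
    return level[0]


def height(node):
    """Number of edges from this node down to its deepest leaf."""
    if not node["children"]:
        return 0
    return 1 + max(height(child) for child in node["children"])


# 16 unit boxes in a row along the x axis.
boxes = [((float(i), 0.0, 0.0), (i + 1.0, 1.0, 1.0)) for i in range(16)]
root = build_bvh(boxes)
print(height(root), len(root["children"]), root["box"])
```

The build is a single linear pass per level, which is why it is cheap. The tree quality depends entirely on the input order: primitives that are neighbours in the input but far apart in space end up under the same parent, which gives large, overlapping boxes.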

BVH traversal

After we have built a BVH we can start tracing some rays. In rough pseudocode the current implementation is:

    stack = empty
    insert root node into stack
    while stack is not empty:
        node = pop a node from the stack
        if we left the bottom level BVH:
            reset ray origin/direction to initial origin/direction
        result = amd_intersect(ray, node)
        switch node type:
            triangle:
                if result is a hit:
                    load some node data
                    process hit
            box node:
                for each box hit:
                    push child node on stack
            custom node 1 (instance):
                load node data
                push the root node of the bottom BVH on the stack
                apply transformation matrix to ray origin/direction
            custom node 2 (AABB geometry):
                load node data
                process hit
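
For readers who want something runnable, here is a small CPU-side sketch of the same loop. It is heavily simplified and not how the driver does it: a software slab test (`hits_box`) stands in for the hardware intersection instruction, triangle leaves are left out, the instance transform is a plain translation instead of a matrix, and "we left the bottom level BVH" is detected by remembering the stack height at the time the instance was entered. All names and the node layout are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    kind: str                                     # "box", "instance" or "aabb"
    children: list = field(default_factory=list)  # box: up to 4 (bounds, Node) pairs
    blas_root: Optional["Node"] = None            # instance: root of the bottom-level BVH
    offset: tuple = (0.0, 0.0, 0.0)               # instance: translation only (assumption)
    name: str = ""                                # aabb leaf: label reported on a hit


def hits_box(origin, direction, bounds):
    """Slab test: does the ray (t >= 0) cross the box bounds = (lo, hi)?"""
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, bounds[0], bounds[1]):
        if d == 0.0:
            if o < lo or o > hi:
                return False
            continue
        a, b = (lo - o) / d, (hi - o) / d
        t_near, t_far = max(t_near, min(a, b)), min(t_far, max(a, b))
    return t_near <= t_far


def trace(root, origin, direction):
    hits = []
    stack = [root]
    ray_origin = origin
    instance_base = 0  # stack height below which we are back in the top-level BVH
    while stack:
        node = stack.pop()
        if len(stack) < instance_base:  # we left the bottom-level BVH
            ray_origin, instance_base = origin, 0
        if node.kind == "box":
            for bounds, child in node.children:
                if hits_box(ray_origin, direction, bounds):
                    stack.append(child)
        elif node.kind == "instance":
            instance_base = len(stack)
            stack.append(node.blas_root)
            # Move the ray into the instance's local space.
            ray_origin = tuple(o - t for o, t in zip(origin, node.offset))
        else:  # "aabb" leaf: its box was already tested by the parent box node
            hits.append(node.name)
    return hits


# One bottom-level BVH with two leaves, instanced twice in the top-level BVH.
blas = Node("box", children=[
    (((0.0, 0.0, 0.0), (1.0, 1.0, 1.0)), Node("aabb", name="a")),
    (((2.0, 0.0, 0.0), (3.0, 1.0, 1.0)), Node("aabb", name="b")),
])
tlas = Node("box", children=[
    (((0.0, 0.0, 0.0), (3.0, 1.0, 1.0)), Node("instance", blas_root=blas)),
    (((0.0, 5.0, 0.0), (3.0, 6.0, 1.0)),
     Node("instance", blas_root=blas, offset=(0.0, 5.0, 0.0))),
])
print(trace(tlas, (0.5, 0.5, -1.0), (0.0, 0.0, 1.0)))
print(trace(tlas, (2.5, 5.5, -1.0), (0.0, 0.0, 1.0)))
```

Even in this toy version every iteration branches on the node type right after the intersection test, which is where the divergence on a GPU would come from.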

We already knew there were inherently going to be some difficulties:

- We have a poor BVH so we’re going to do way more iterations than needed.

- Calling shaders as a result of hits is going to result in some divergence.

Furthermore, this also clearly shows some difficulties with how we approached the intersection instruction. Two advantages of the intersection instruction are that it avoids divergence in computing collisions when a subgroup contains different node types, and that it is cheaper when only a few lanes are active. (A single CU can process one ray/node intersection per cycle, modulo memory latency, while it can process an ALU instruction on 64 lanes per cycle.)

However, even if it avoids the divergence in the collision computation, we still introduce a ton of divergence when processing the results of the intersection. So we are still doing pretty badly here.

A fast GPU traversal stack needs some work too

Another thing to note is our traversal stack size. According to the Vulkan specification a bottom level acceleration structure should support 2^24 - 1 triangles and a top level acceleration structure should support 2^24 - 1 bottom level structures. With 4 children in each internal node that is about 12 levels per structure, so the combined tree can end up about 24 levels deep.

In each internal node iteration of our loop we pop one element and push up to 4 elements, a net growth of up to 3 entries per level, so at the deepest level of traversal we could end up with a 72 entry stack. Assuming these are 32-bit node identifiers, that ends up with 288 bytes of stack per lane, or ~18 KiB per 64 lane workgroup (the minimum which could possibly keep a CU busy with an ALU only workload). Given that we have 64 KiB of LDS (yes, I am using LDS since there is no divergent dynamic register addressing) per CU, that leaves only 3 workgroups per CU, leaving very few options for parallelism between different hardware execution units (e.g. the ALU and the texture unit that executes the ray intersections) or for latency hiding of memory operations.
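
The arithmetic in the last two paragraphs can be checked with a few lines. This is only a back-of-the-envelope sketch; the constants are the ones quoted in the text, and the helper `levels` is a made-up name.

```python
MAX_PRIMITIVES = 2**24 - 1   # triangles per bottom-level structure
MAX_INSTANCES = 2**24 - 1    # bottom-level structures per top-level structure
BRANCHING = 4                # children per internal node
ENTRY_BYTES = 4              # 32-bit node identifiers
LANES = 64                   # lanes per workgroup
LDS_BYTES = 64 * 1024        # LDS per CU


def levels(leaf_count, branching):
    """Levels of internal nodes a full tree needs to hold leaf_count leaves."""
    depth, capacity = 0, 1
    while capacity < leaf_count:
        capacity *= branching
        depth += 1
    return depth


depth = levels(MAX_PRIMITIVES, BRANCHING) + levels(MAX_INSTANCES, BRANCHING)
# Each level pops 1 entry and pushes up to 4, so the stack grows by up to 3.
stack_entries = depth * (BRANCHING - 1)
bytes_per_lane = stack_entries * ENTRY_BYTES
bytes_per_workgroup = bytes_per_lane * LANES
workgroups_per_cu = LDS_BYTES // bytes_per_workgroup

print(depth, stack_entries, bytes_per_lane,
      bytes_per_workgroup / 1024, workgroups_per_cu)
```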

So ideally we get this stack size down significantly.

Where do we go next?

The first step is to get CTS passing and to get an initial merge request into upstream Mesa. As a follow-on to that, I’d like to get a minimal prototype going for some DXR 1.0 games with vkd3d-proton, just to make sure we have the right feature coverage.

After that we’ll have to do all the traversal optimizations. I’ll probably implement a bunch of instrumentation so I actually have a clue about what to optimize. This is where having some runnable games really helps to get the right idea about performance bottlenecks.

Finally, with some luck better shaders to build a BVH will materialize as well.